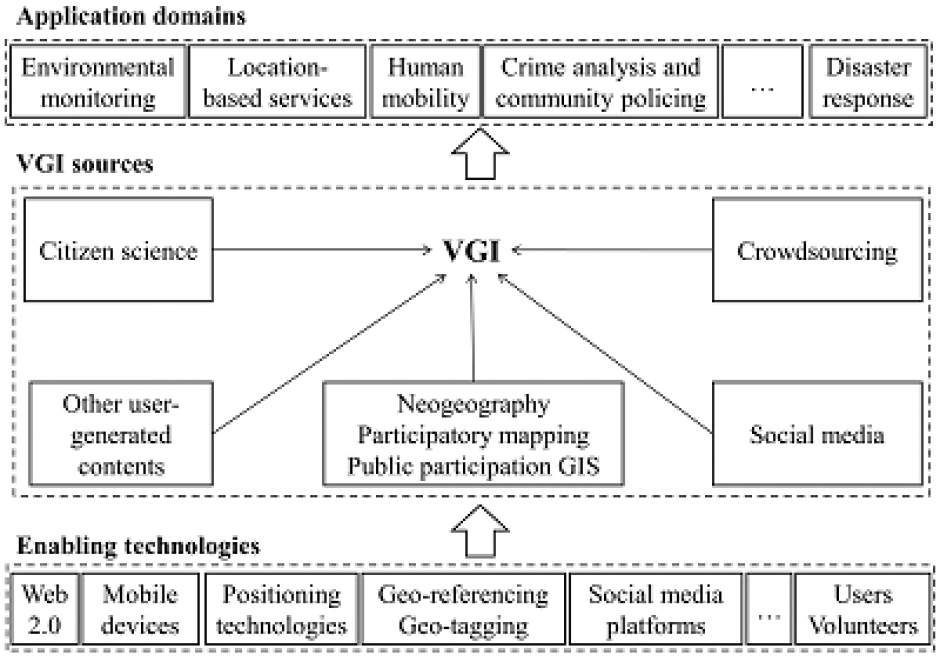

DC-29 - Volunteered Geographic Information

Volunteered geographic information (VGI) refers to geo-referenced data created by citizen volunteers. VGI has proliferated in recent years due to the advancement of technologies that enable the public to contribute geographic data. VGI is not only an innovative mechanism for geographic data production and sharing, but may also greatly influence GIScience and geography and their relationship to society. Despite the advantages of VGI, its data quality is under constant scrutiny, as quality assessment is the basis on which users evaluate its fitness for use in applications. Several general approaches have been proposed to assure VGI data quality, but only a few methods have been developed to tackle VGI biases. Analytical methods that can accommodate the imperfect representativeness and biases in VGI are much needed for inferential use, where the underlying phenomena of interest are inferred from a sample of VGI observations. Using VGI for inference and modeling adds much to its value, so addressing representativeness and bias is important to fulfilling VGI’s potential. Privacy and security are also important issues. Although VGI has been used in many domains, more research is desirable to address the fundamental intellectual and scholarly needs that persist in the field.

DM-70 - Problems of Large Spatial Databases

Large spatial databases, often labeled as geospatial big data, exceed the capacity of commonly used computing systems as a result of data volume, variety, velocity, and veracity. Additional problems, also labeled with V’s, are cited, but these four primary ones are the most problematic and are the focus of this chapter (Li et al., 2016; Panimalar et al., 2017). Sources include satellites, aircraft and drone platforms, vehicles, geosocial networking services, mobile devices, and cameras. The problems in processing these data to extract useful information include query, analysis, and visualization. Data mining techniques and machine learning algorithms, such as deep convolutional neural networks, are often used with geospatial big data. The obvious problem is handling the large data volumes, particularly for input and output operations, which requires parallel reading and writing of the data as well as high-speed computers, disk services, and network transfer speeds. Additional problems of large spatial databases include the variety and heterogeneity of the data, which require advanced algorithms to handle different data types and characteristics, and integration with other data. The velocity at which the data are acquired is a challenge, especially with today’s advanced sensors and the Internet of Things, which includes millions of devices creating data on short temporal scales of microseconds to minutes. Finally, the veracity, or truthfulness, of large spatial databases is difficult to establish and validate, particularly for all data elements in the database.
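
The volume problem described above is commonly approached by splitting the data into partitions, processing each partition independently, and merging the partial results. The following is a minimal sketch of that pattern, not a method taken from the chapter: it computes the bounding box of a point set by chunk. The function names (`chunk_bbox`, `merge_bboxes`, `parallel_bbox`) and the parameters `chunk_size` and `workers` are hypothetical, and the in-memory list stands in for partitions that would normally be read from disk or a network service.

```python
from concurrent.futures import ThreadPoolExecutor


def chunk_bbox(points):
    """Return (min_x, min_y, max_x, max_y) for one chunk of (x, y) points."""
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    return (min(xs), min(ys), max(xs), max(ys))


def merge_bboxes(boxes):
    """Combine per-chunk bounding boxes into one overall bounding box."""
    boxes = list(boxes)
    return (
        min(b[0] for b in boxes),
        min(b[1] for b in boxes),
        max(b[2] for b in boxes),
        max(b[3] for b in boxes),
    )


def parallel_bbox(points, chunk_size=1000, workers=4):
    """Bounding box of all points, computed chunk by chunk.

    Assumption: each chunk stands in for a partition (file, tile, or
    database page) that could be read and processed independently.
    """
    if not points:
        raise ValueError("points must not be empty")
    # Split the data into fixed-size partitions.
    chunks = [points[i:i + chunk_size] for i in range(0, len(points), chunk_size)]
    # Process the partitions concurrently, then merge the partial results.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partial = pool.map(chunk_bbox, chunks)
        return merge_bboxes(partial)
```

Threads are used here because they mainly help when the per-chunk work is dominated by input and output; for CPU-bound processing in Python, `concurrent.futures.ProcessPoolExecutor` offers the same interface with separate processes.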