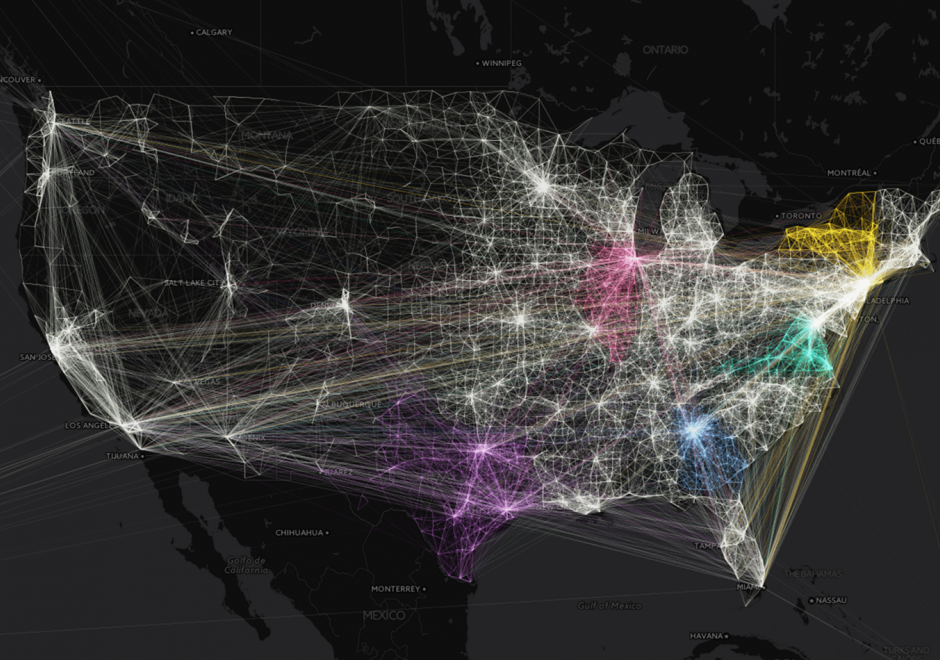

AM-94 - Machine Learning Approaches

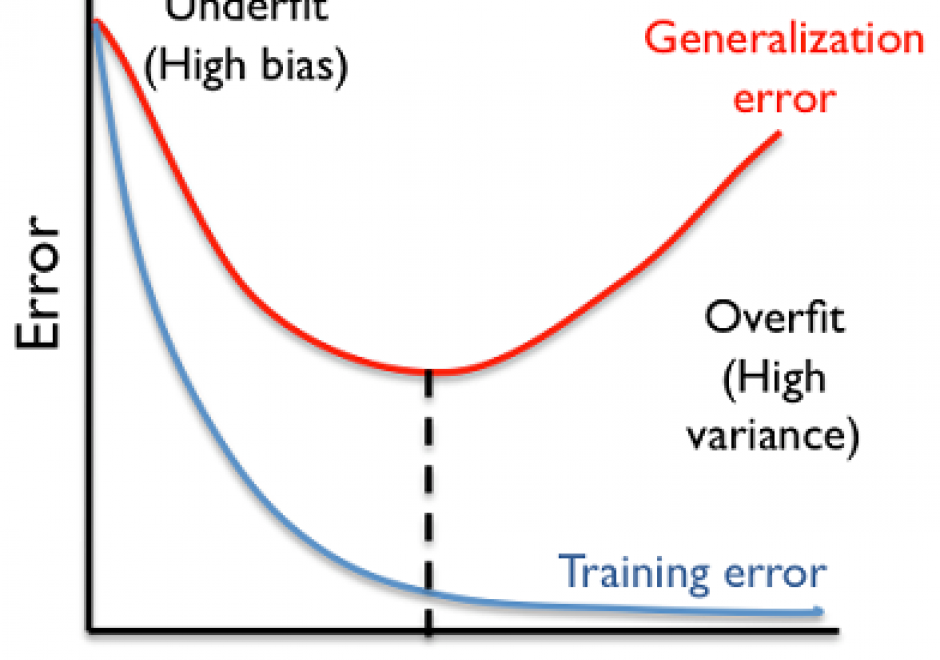

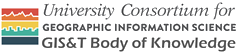

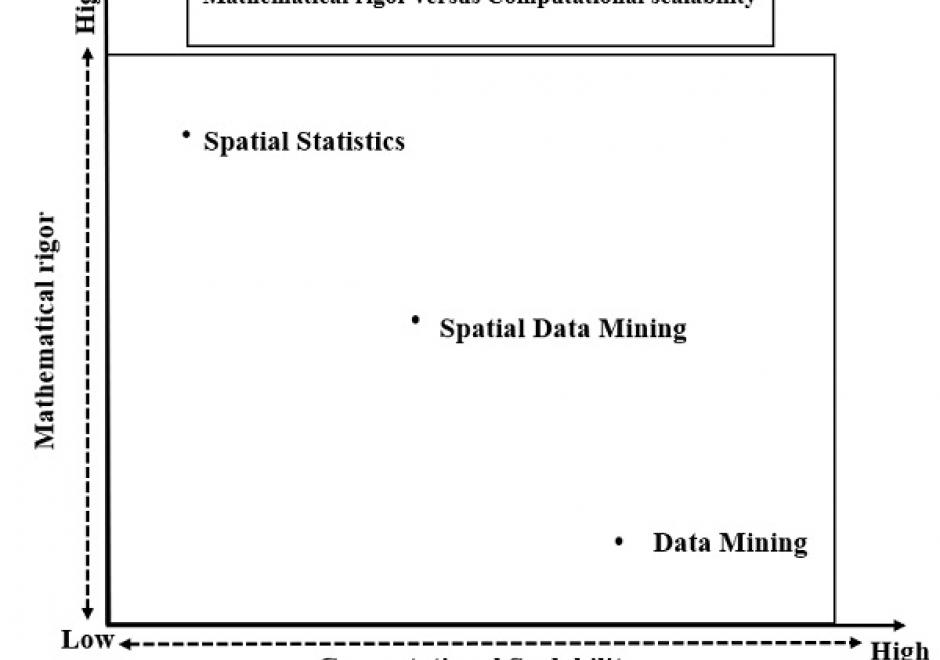

Machine learning approaches are increasingly used across numerous applications to learn from data, generate new knowledge, advance scientific studies, and support automated decision making. This knowledge entry introduces the fundamentals of Machine Learning (ML), focusing on how feature spaces, models, and algorithms are developed and applied in geospatial studies. An example of an ML workflow for supervised/unsupervised learning is also introduced. The main challenges in ML approaches and our vision for future work are discussed at the end.
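
As a rough illustration of what a supervised workflow can look like (split the data, fit a model on a feature space, predict, evaluate), the sketch below uses a nearest-centroid classifier. The classifier choice, the two-feature space, the class names `water` and `forest`, and the synthetic samples are all assumptions made for this example; they are not taken from the entry itself.

```python
import random

def train_test_split(samples, test_fraction, seed):
    """Shuffle labeled samples and split them into train and test sets."""
    rng = random.Random(seed)
    shuffled = samples[:]
    rng.shuffle(shuffled)
    cut = int(len(shuffled) * (1 - test_fraction))
    return shuffled[:cut], shuffled[cut:]

def fit_centroids(train):
    """'Model fitting': compute the mean feature vector of each class."""
    sums = {}
    for features, label in train:
        total, count = sums.get(label, ([0.0] * len(features), 0))
        sums[label] = ([t + f for t, f in zip(total, features)], count + 1)
    return {label: [t / count for t in total]
            for label, (total, count) in sums.items()}

def predict(centroids, features):
    """Assign the class whose centroid is closest in feature space."""
    return min(
        centroids,
        key=lambda label: sum((c - f) ** 2
                              for c, f in zip(centroids[label], features)),
    )

# Synthetic, hypothetical samples: two features per location, two classes.
rng = random.Random(0)
samples = (
    [([rng.uniform(-1, 1), rng.uniform(-1, 1)], "water") for _ in range(20)]
    + [([rng.uniform(9, 11), rng.uniform(9, 11)], "forest") for _ in range(20)]
)

train, test = train_test_split(samples, test_fraction=0.25, seed=42)
centroids = fit_centroids(train)
correct = sum(predict(centroids, features) == label for features, label in test)
accuracy = correct / len(test)
```

Because the two synthetic classes are deliberately well separated, the held-out accuracy here says nothing about real geospatial data; the point is only the order of the steps.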

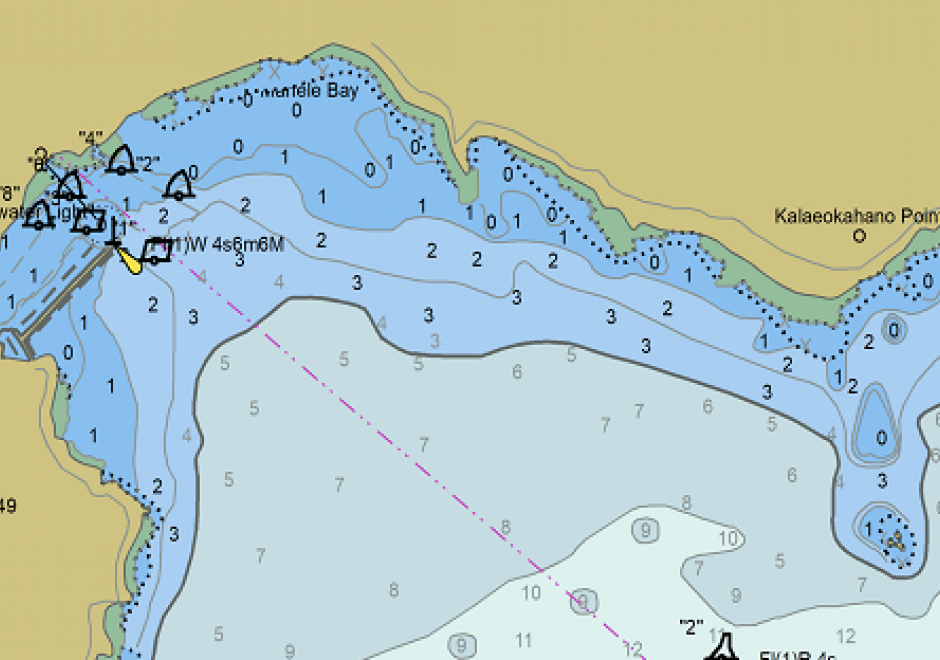

DM-85 - Point, Line, and Area Generalization

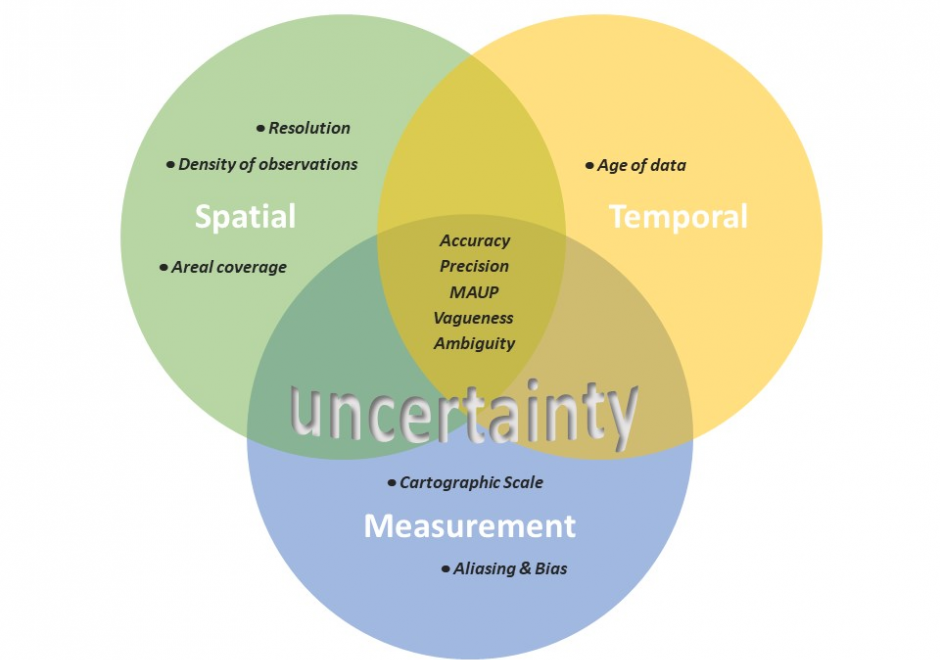

Generalization is an important and unavoidable part of making maps because geographic features cannot be represented on a map without undergoing transformation. Maps abstract and portray features using vector (i.e., points, lines, and polygons) and raster (i.e., pixels) spatial primitives, which are usually labeled. These spatial primitives are subjected to further generalization when the map scale is changed. Generalization is a contradictory process. On one hand, it alters the look and feel of a map to improve the overall user experience, especially for map reading and interpretive analysis. On the other hand, generalization has documented quality implications and can sacrifice feature detail, dimensions, positions, or topological relationships. A variety of techniques are used in generalization, including selection, simplification, displacement, exaggeration, and classification. The techniques are automated through computer algorithms such as Douglas-Peucker and Visvalingam-Whyatt to enhance their operational efficiency and create consistent generalization results. As maps are now created easily and quickly, and used widely by both experts and non-experts owing to major advances in IT, it is increasingly important for virtually everyone to appreciate the circumstances, techniques, and outcomes of generalizing maps. This is critical to promoting better map design and production as well as socially appropriate uses.
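
To make the line simplification technique more concrete, here is a minimal sketch of the Douglas-Peucker algorithm named above. It keeps the endpoints of a line, finds the vertex farthest from the chord joining them, and recurses on both halves if that vertex lies beyond a tolerance. The function names, the sample coordinates, and the tolerance values are illustrative assumptions; the distance is measured to the infinite line through the endpoints, a common simplification.

```python
import math

def perpendicular_distance(point, start, end):
    """Distance from `point` to the line through `start` and `end`."""
    (x, y), (x1, y1), (x2, y2) = point, start, end
    dx, dy = x2 - x1, y2 - y1
    length = math.hypot(dx, dy)
    if length == 0.0:
        # Degenerate chord: fall back to point-to-point distance.
        return math.hypot(x - x1, y - y1)
    return abs(dy * (x - x1) - dx * (y - y1)) / length

def douglas_peucker(points, tolerance):
    """Return a simplified copy of `points`, always keeping both endpoints."""
    if len(points) < 3:
        return list(points)
    start, end = points[0], points[-1]
    max_dist, index = 0.0, 0
    for i in range(1, len(points) - 1):
        d = perpendicular_distance(points[i], start, end)
        if d > max_dist:
            max_dist, index = d, i
    if max_dist <= tolerance:
        # Every interior vertex is close enough to the chord: drop them all.
        return [start, end]
    # Keep the farthest vertex and simplify each side independently.
    left = douglas_peucker(points[:index + 1], tolerance)
    right = douglas_peucker(points[index:], tolerance)
    return left[:-1] + right  # avoid duplicating the shared vertex

# Hypothetical polyline in arbitrary map units.
line = [(0, 0), (1, 0.1), (2, 0), (3, 2), (4, 0)]
simplified = douglas_peucker(line, tolerance=0.5)
coarse = douglas_peucker(line, tolerance=3.0)
```

With the smaller tolerance only the near-collinear vertex `(1, 0.1)` is dropped, while the larger tolerance collapses the line to its two endpoints. This mirrors the trade-off described above: a coarser result is easier to read at small scales but sacrifices feature detail.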