DM-11 - Hierarchical data models

- Illustrate the quadtree model

- Describe the advantages and disadvantages of the quadtree model for geographic database representation and modeling

- Describe alternatives to quadtrees for representing hierarchical tessellations (e.g., hextrees, R-trees, pyramids)

- Explain how quadtrees and other hierarchical tessellations can be used to index large volumes of raster or vector data

- Implement a format for encoding quadtrees in a data file
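The first and last objectives above (illustrating the quadtree model and encoding it in a data file) can be made concrete with a minimal region-quadtree sketch. It rests on several assumptions that are not part of the objectives themselves. The input is a square binary raster whose side is a power of two. The file layout is a hypothetical two-line text format: the side length, then a depth-first (preorder) code in which `G` marks a grey (mixed) node whose four children follow in NW, NE, SW, SE order, and `B`/`W` mark uniform leaves of value 1/0. This is an illustration, not a standard interchange format.

```python
from typing import List, Tuple

Raster = List[List[int]]  # square grid of 0/1 cells; side assumed to be a power of two


def _quadrants(row: int, col: int, half: int) -> Tuple[Tuple[int, int], ...]:
    """Top-left corners of the four children, in the assumed NW, NE, SW, SE order."""
    return ((row, col), (row, col + half), (row + half, col), (row + half, col + half))


def encode(raster: Raster, row: int = 0, col: int = 0, size: int = 0) -> str:
    """Preorder quadtree code for the block starting at (row, col) with side `size`.

    'W' = uniform 0 leaf, 'B' = uniform 1 leaf, 'G' = mixed node whose
    four child codes follow immediately. size=0 means "the whole raster".
    """
    if size == 0:
        size = len(raster)
    first = raster[row][col]
    # A block becomes a single leaf when every cell in it has the same value.
    uniform = all(
        raster[r][c] == first
        for r in range(row, row + size)
        for c in range(col, col + size)
    )
    if uniform:
        return "B" if first else "W"
    half = size // 2
    return "G" + "".join(encode(raster, r, c, half) for r, c in _quadrants(row, col, half))


def decode(code: str, size: int) -> Raster:
    """Rebuild a size x size raster from a preorder quadtree code."""
    raster = [[0] * size for _ in range(size)]
    pos = 0  # index of the next unread symbol in `code`

    def fill(row: int, col: int, n: int) -> None:
        nonlocal pos
        symbol = code[pos]
        pos += 1
        if symbol == "G":
            half = n // 2
            for r, c in _quadrants(row, col, half):
                fill(r, c, half)
        else:
            value = 1 if symbol == "B" else 0
            for r in range(row, row + n):
                for c in range(col, col + n):
                    raster[r][c] = value

    fill(0, 0, size)
    return raster


def save(path: str, raster: Raster) -> None:
    """Write the hypothetical two-line format: side length, then preorder code."""
    with open(path, "w", encoding="ascii") as f:
        f.write(f"{len(raster)}\n{encode(raster)}\n")


def load(path: str) -> Raster:
    """Read a file written by save() back into a raster."""
    with open(path, encoding="ascii") as f:
        size = int(f.readline())
        return decode(f.readline().strip(), size)


# Usage: a 4 x 4 raster whose NW, NE and SE quadrants are uniform.
grid = [
    [1, 1, 0, 0],
    [1, 1, 0, 0],
    [0, 1, 1, 1],
    [0, 0, 1, 1],
]
code = encode(grid)  # "GBWGWBWWB": 9 symbols describe 16 cells
```

The example also hints at the model's trade-offs: each homogeneous quadrant collapses to one symbol, so large uniform regions compress well, while a checkerboard pattern needs more symbols than it has cells (a 2 × 2 checkerboard encodes as 5 symbols). The top-down uniformity test rescans cells at every level, which is acceptable for a sketch; a bottom-up build that merges four identical children would scale better to large rasters.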

DC-19 - Ground Verification and Accuracy Assessment

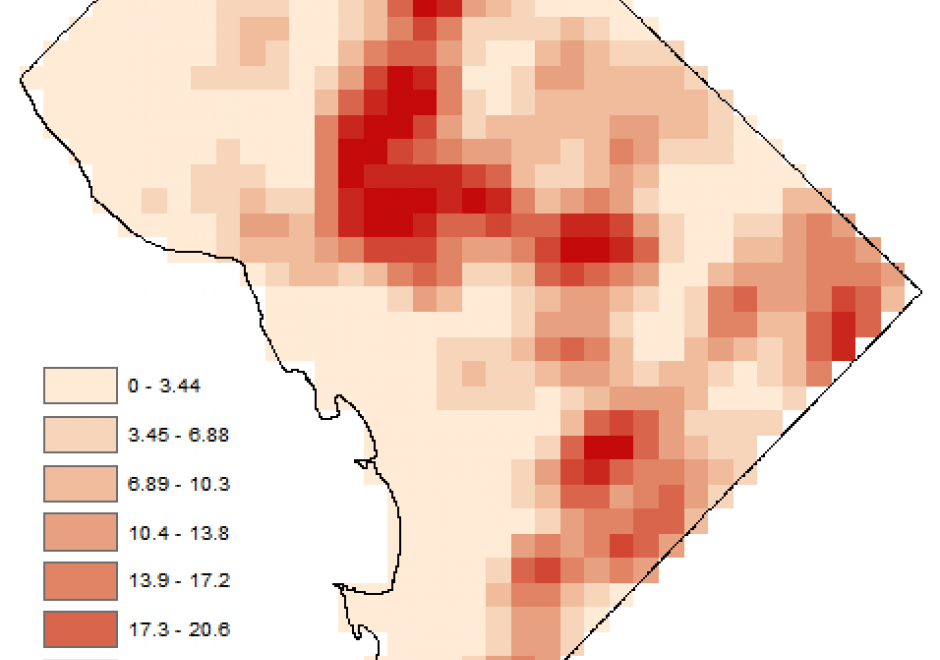

Spatial products such as maps of land cover, soil type, wildfire, glaciers, and surface water are increasingly available and increasingly used to inform science and policy decisions. These maps are not free of error, so it is critical that each product be accompanied by a description of its quality. For a thematic map, one aspect of quality is obtained by conducting a spatially explicit accuracy assessment, in which the map class and the reference class are compared for each spatial unit (e.g., each 30 m × 30 m pixel). The outcome of an accuracy assessment is a description of the quality of the finished map, as distinct from evaluations of map quality carried out during the map production process. The accuracy results can be used to decide whether the map is of adequate quality for an intended application, as input to uncertainty analyses, and as information for improving future map products.
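The per-unit comparison of map class and reference class is commonly tabulated as an error (confusion) matrix, from which overall, user's, and producer's accuracies follow. The sketch below assumes the paired labels are already in hand, one map label and one reference label per spatial unit, and that each unit counts equally. It deliberately omits the probability sampling design and area-weighted estimators that practical assessments use, so treat it as an illustration of the tabulation only. The function names and example labels are hypothetical.

```python
from collections import Counter
from typing import Dict, List, Sequence


def error_matrix(map_cls: Sequence[str], ref_cls: Sequence[str],
                 classes: Sequence[str]) -> List[List[int]]:
    """Count paired labels; rows are map classes, columns are reference classes."""
    if len(map_cls) != len(ref_cls):
        raise ValueError("each spatial unit needs one map label and one reference label")
    counts = Counter(zip(map_cls, ref_cls))
    return [[counts[(m, r)] for r in classes] for m in classes]


def summarize(matrix: List[List[int]], classes: Sequence[str]) -> Dict[str, object]:
    """Overall, user's (per map class) and producer's (per reference class) accuracy.

    Assumes every unit carries equal weight, i.e. no sampling-design weighting.
    """
    k = len(classes)
    total = sum(sum(row) for row in matrix)
    correct = sum(matrix[i][i] for i in range(k))
    row_tot = [sum(matrix[i]) for i in range(k)]
    col_tot = [sum(matrix[i][j] for i in range(k)) for j in range(k)]
    nan = float("nan")  # reported when a class never occurs in the map or reference
    return {
        "overall": correct / total,
        # User's accuracy: share of units mapped as a class that the reference confirms.
        "users": {c: (matrix[i][i] / row_tot[i] if row_tot[i] else nan)
                  for i, c in enumerate(classes)},
        # Producer's accuracy: share of reference units of a class that the map gets right.
        "producers": {c: (matrix[j][j] / col_tot[j] if col_tot[j] else nan)
                      for j, c in enumerate(classes)},
    }


# Usage with eight hypothetical units.
classes = ["forest", "water", "urban"]
mapped = ["forest", "forest", "water", "urban", "forest", "water", "urban", "urban"]
reference = ["forest", "water", "water", "urban", "forest", "water", "forest", "urban"]
m = error_matrix(mapped, reference, classes)  # [[2, 1, 0], [0, 2, 0], [1, 0, 2]]
s = summarize(m, classes)                     # overall accuracy 6 / 8 = 0.75
```

Keeping map classes on the rows and reference classes on the columns makes user's accuracy a row ratio and producer's accuracy a column ratio; the two differ whenever errors of commission and omission are unbalanced, as they are here for water and urban.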